XR STUDY

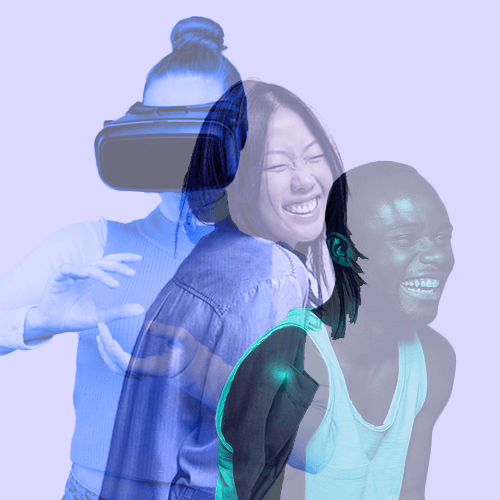

Integrating IRL "into" VR while augmenting VR's multi-sensory elements "back into" IRL to design beyond the boundaries of spatial computing.

01 ► Refresh Your "Senses"

02 ▼ Opportunity

Current VR design prioritizes visuals and first-person perspectives, relying on gesture interfaces, body movement, and sound to fill in the experiential gaps. VR is designed to be fully immersive, set apart from ordinary life as an escape, yet health studies link escapism to addictive behaviors. How can design reduce these addictive elements and encourage IRL social experiences while still providing a compelling experience?

By letting users scan their IRL surroundings into the VR experience and augment digital elements back into reality using IOT amphitheater devices, this approach to multi-sensory spatial design enhances the VR group experience and keeps solo players grounded.

03 ► Features

Step into a familiar digital realm built from AI-enhanced, real-world structures. A cast video mirrors the VR immersion for the audience, while physical, controller-like IOT devices augment the experience with reactive lamps and speakers. Interactions between VR and audience players guide their communication to reclaim the immersive group experience, and transitions between IRL and digital ease participants from one realm to the other and limit unrealistic attachment.

0A ● HYBRID PLAYERS

To activate an IRL social experience, solo VR players interact with in-game “buttons” that are linked to the audience’s IRL IOT “buttons”.

The audience views the VR experience through a mirrored video cast with responsive sound and light projectors. Sound and light supplement verbal conversation as accessible communication tools that create authentic shared experiences in a hybrid amphitheater.
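The button link between solo VR players and the audience can be pictured as a two-way pairing: a press on either side queues a cue for its partner on the other side. The sketch below is a minimal illustration under that assumption; `HybridButtonLink`, its methods, and the `"irl"`/`"vr"` cue labels are hypothetical names, not the project's actual implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class HybridButtonLink:
    """Hypothetical two-way registry pairing in-game buttons with IRL IOT buttons."""

    game_to_device: dict[str, str] = field(default_factory=dict)
    device_to_game: dict[str, str] = field(default_factory=dict)
    # Cues waiting to be rendered, stored as (target_side, target_id) tuples.
    pending_cues: list[tuple[str, str]] = field(default_factory=list)

    def pair(self, game_button: str, device_button: str) -> None:
        # Store the pairing in both directions so either side can trigger the other.
        self.game_to_device[game_button] = device_button
        self.device_to_game[device_button] = game_button

    def press_in_game(self, game_button: str) -> str | None:
        # A VR player's press queues a light/sound cue on the paired IRL device.
        device = self.game_to_device.get(game_button)
        if device is not None:
            self.pending_cues.append(("irl", device))
        return device

    def press_irl(self, device_button: str) -> str | None:
        # An audience member's press queues a cue on the paired in-game button.
        game_button = self.device_to_game.get(device_button)
        if game_button is not None:
            self.pending_cues.append(("vr", game_button))
        return game_button
```

For example, after `link.pair("vr_gate_button", "lamp_disk_1")`, a VR press on `"vr_gate_button"` queues a cue for `"lamp_disk_1"`, and an audience press on the lamp queues one back in the game. Keeping both directions in one registry means neither side is a passive spectator.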

0B ● IRL INTEGRATION

To evolve VR immersion from an addictive to a balanced experience, imagine a space that feels familiar but is completely transformed into a surreal realm with generative AI imagery.

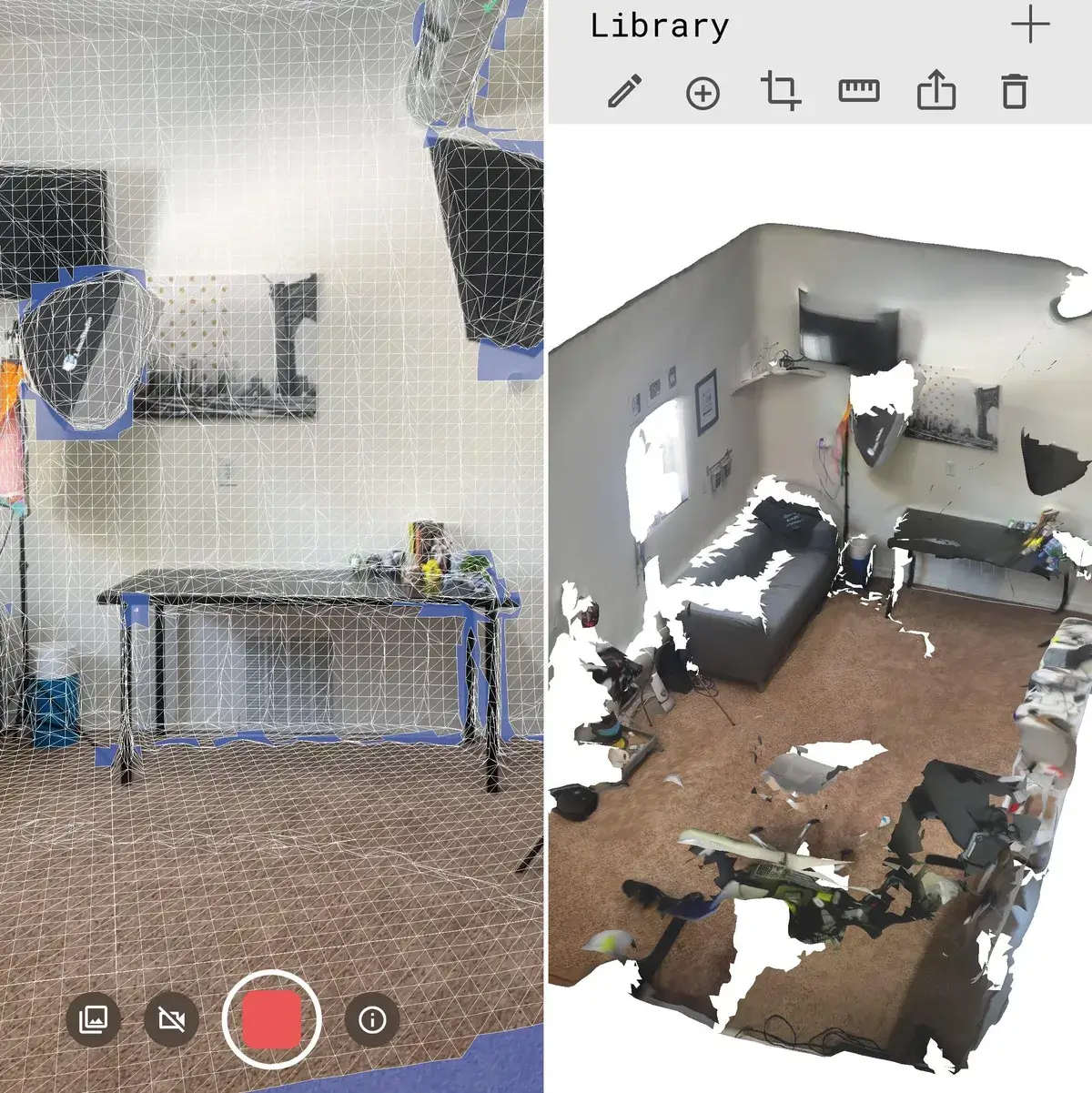

LiDAR scanning captures structures in the user's IRL room, so each VR level or world instantly becomes a hybrid realm, heightened by sensory elements such as physics-defying sound paired with familiar room dimensions.
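One simple way to carry "familiar room dimensions" into a VR level is to measure the scanned room's extents and stretch a template level to match. The sketch below assumes the LiDAR scan has already been exported as a list of `(x, y, z)` points and that the room is roughly aligned with the scan axes; `room_extents` and `fit_level_scale` are hypothetical helpers, and a bounding box is a deliberately crude stand-in for real scene reconstruction.

```python
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


def room_extents(points: Iterable[Vec3]) -> Vec3:
    """Axis-aligned extents (x, y, z) of a scanned point cloud, in scan units."""
    pts = list(points)
    if not pts:
        raise ValueError("scan contains no points")
    xs, ys, zs = zip(*pts)
    # The bounding box spans from the smallest to the largest coordinate per axis.
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))


def fit_level_scale(room: Vec3, level: Vec3) -> Vec3:
    """Per-axis scale that stretches a template level to the room's extents."""
    if any(dim <= 0 for dim in level):
        raise ValueError("template level dimensions must be positive")
    sx, sy, sz = (r / l for r, l in zip(room, level))
    return (sx, sy, sz)
```

For instance, a room measuring 4 × 3 × 2.5 units fitted to an 8 × 6 × 5 template yields a uniform scale of 0.5 on every axis, so the surreal level keeps the proportions the player already knows.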

0C ● RESTORATIVE TRANSITIONS

Ceremonies transition players back and forth between IRL and hybrid realms to ease the journey between them and limit unrealistic attachment.

IRL room structures and sounds slowly fade in and out to help users stay in control of their spatial awareness. When players and the audience activate IOT devices, the activation signals the IRL link and acts as a totem of IRL awareness.
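A slow fade between IRL and the hybrid realm can be modeled as a crossfade whose two weights always sum to one, so the room never drops out abruptly. The sketch below assumes a smoothstep easing curve and a fixed transition duration; `transition_mix` and its `entering` flag are hypothetical, and the weights could drive anything from passthrough opacity to audio volume.

```python
from typing import Tuple


def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on [0, 1]; inputs outside the range are clamped."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def transition_mix(elapsed_s: float, duration_s: float, entering: bool = True) -> Tuple[float, float]:
    """Return (irl_weight, virtual_weight) at a point in a transition ceremony.

    entering=True fades from IRL into the hybrid realm; False fades back to IRL.
    """
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    progress = smoothstep(elapsed_s / duration_s)
    virtual = progress if entering else 1.0 - progress
    # The two weights always sum to 1, so one layer replaces the other gradually.
    return (1.0 - virtual, virtual)
```

Halfway through a 10-second entry ceremony, both layers sit at equal weight; smoothstep keeps the start and end of the fade gentle, which suits the goal of preserving spatial awareness.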

04 ▼ Research Phase

I conducted a two-year immersive study to uncover the most effective digital grounding techniques.

One blind person's story of moving through the world without sight inspired insights into empathy through experience, reimagining visuals as sound patterns, and mirroring shared experiences.

Comparing in-person miniature golf with virtual putt-putt, I rediscovered the magic of transforming the everyday with imaginative environments and physics.

I also took part in remote group VR therapy sessions with participants from across the country. Remote experiences can trick the brain into a sense of isolation and lower cognitive performance, which points to the need for hybrid engagement when the goal is improving mental function.

05 ► Design Playbook

Mixed reality explorations investigating a hybrid VR experience that blends in-person community with IOT engagement.

0A ● SPATIAL CONTROLLERS

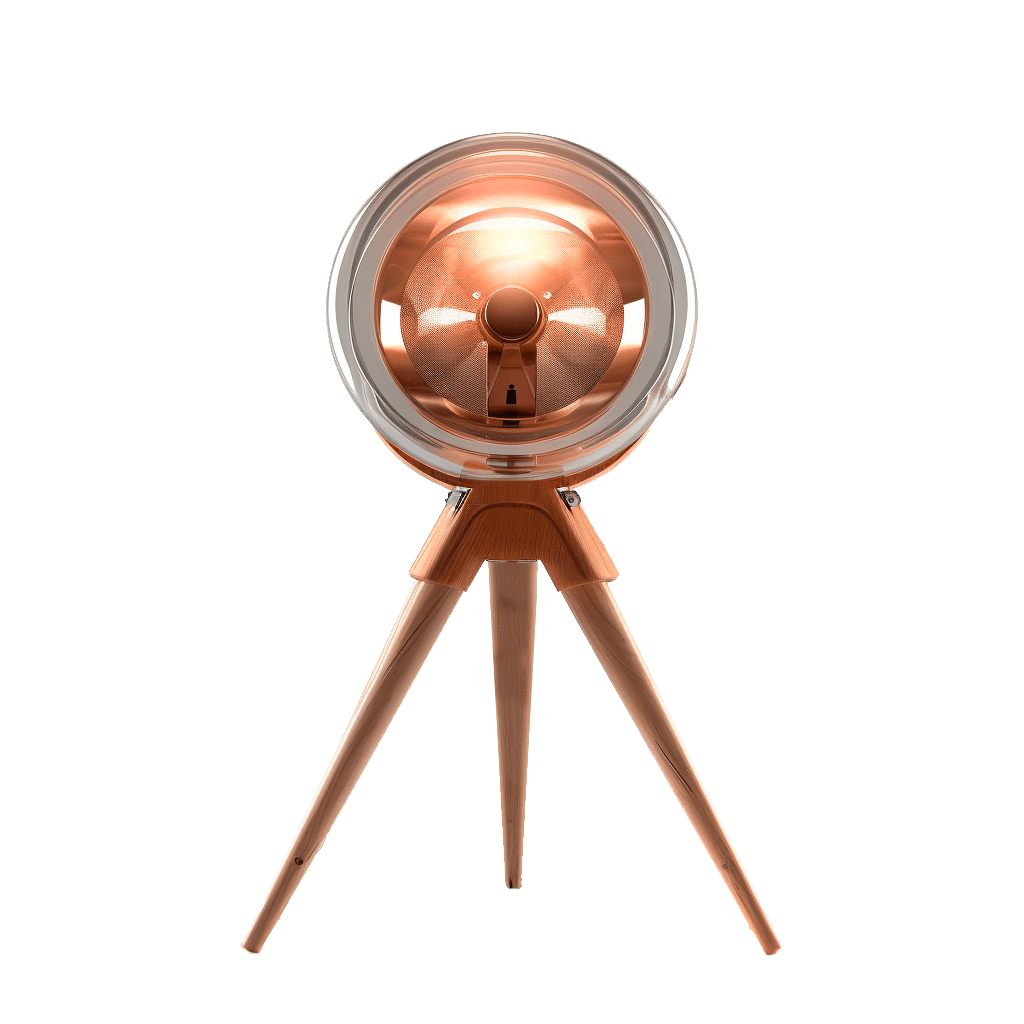

Building on Unreal Engine, I integrated the Philips Hue API to communicate with Hue Bluetooth devices.

These IOT devices became interactive “remote” elements to signal communication between players and manipulate cues for social navigation.
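To make a lamp react to a player's action, a client sends a light-state update to the Hue system. The sketch below builds such a request against the Hue v1 REST endpoint (`PUT /api/<username>/lights/<id>/state`), assuming the lights are paired with a Hue Bridge on the local network. The bridge IP, username (application key), and light ID are placeholders, `hue_state_request` is a hypothetical helper, and the project itself ran inside Unreal, so this Python version only illustrates the request shape, not the author's integration.

```python
import json
import urllib.request
from typing import Optional


def hue_state_request(
    bridge_ip: str,
    username: str,
    light_id: str,
    *,
    on: bool = True,
    brightness: int = 254,
    hue: Optional[int] = None,
    sat: Optional[int] = None,
    transition_ms: int = 400,
) -> urllib.request.Request:
    """Build (but do not send) a Hue v1 light-state update."""
    body = {
        "on": on,
        # Hue v1 brightness ranges from 1 to 254.
        "bri": max(1, min(254, brightness)),
        # transitiontime is expressed in multiples of 100 ms.
        "transitiontime": round(transition_ms / 100),
    }
    if hue is not None:
        body["hue"] = max(0, min(65535, hue))  # hue wraps around 0..65535
    if sat is not None:
        body["sat"] = max(0, min(254, sat))  # saturation ranges 0..254
    url = f"http://{bridge_ip}/api/{username}/lights/{light_id}/state"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
```

Sending it is then a matter of `urllib.request.urlopen(req)` once a real bridge and key are available. Keeping request construction separate from sending makes the cue logic testable without hardware in the room.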

0B ● GENERATIVE SKETCHES

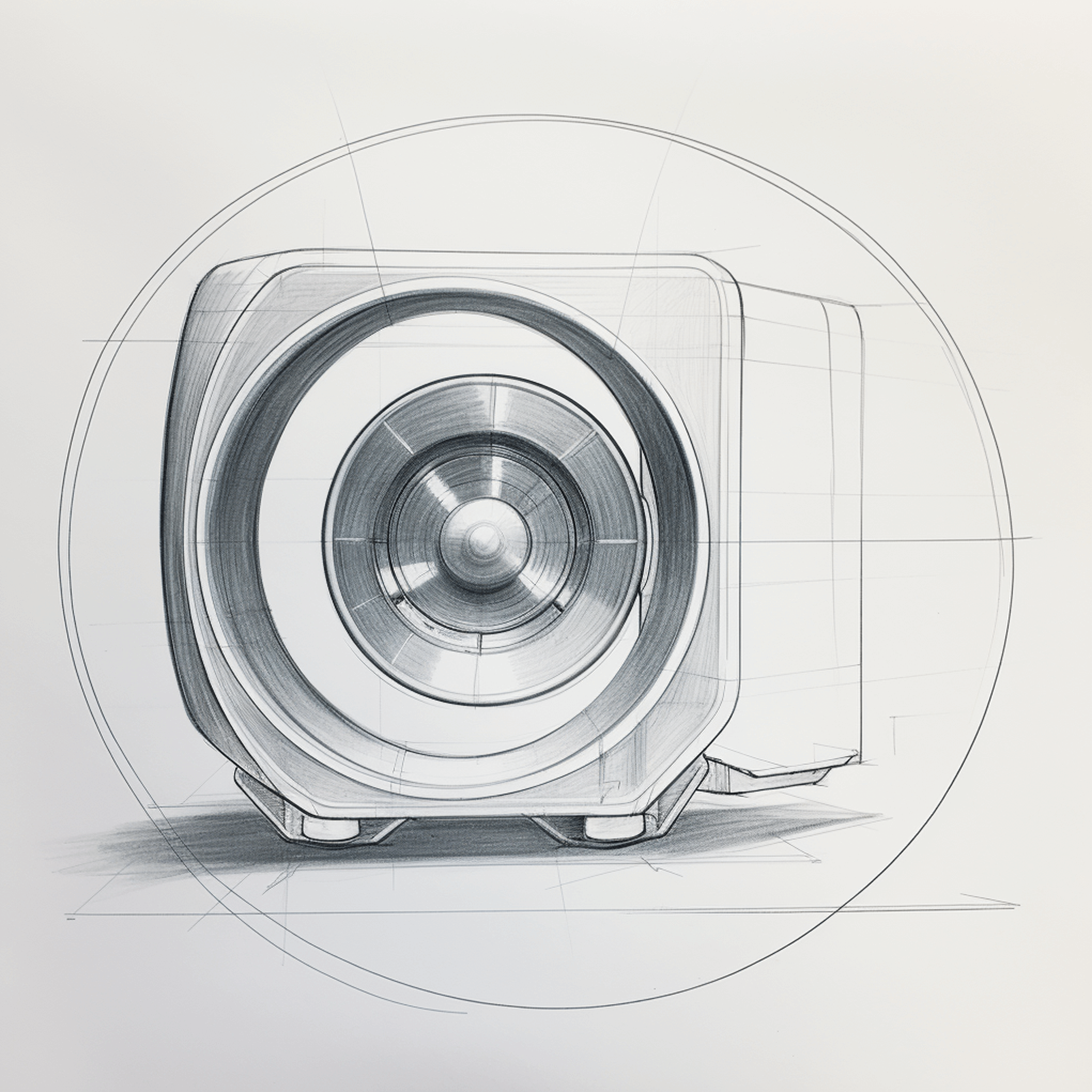

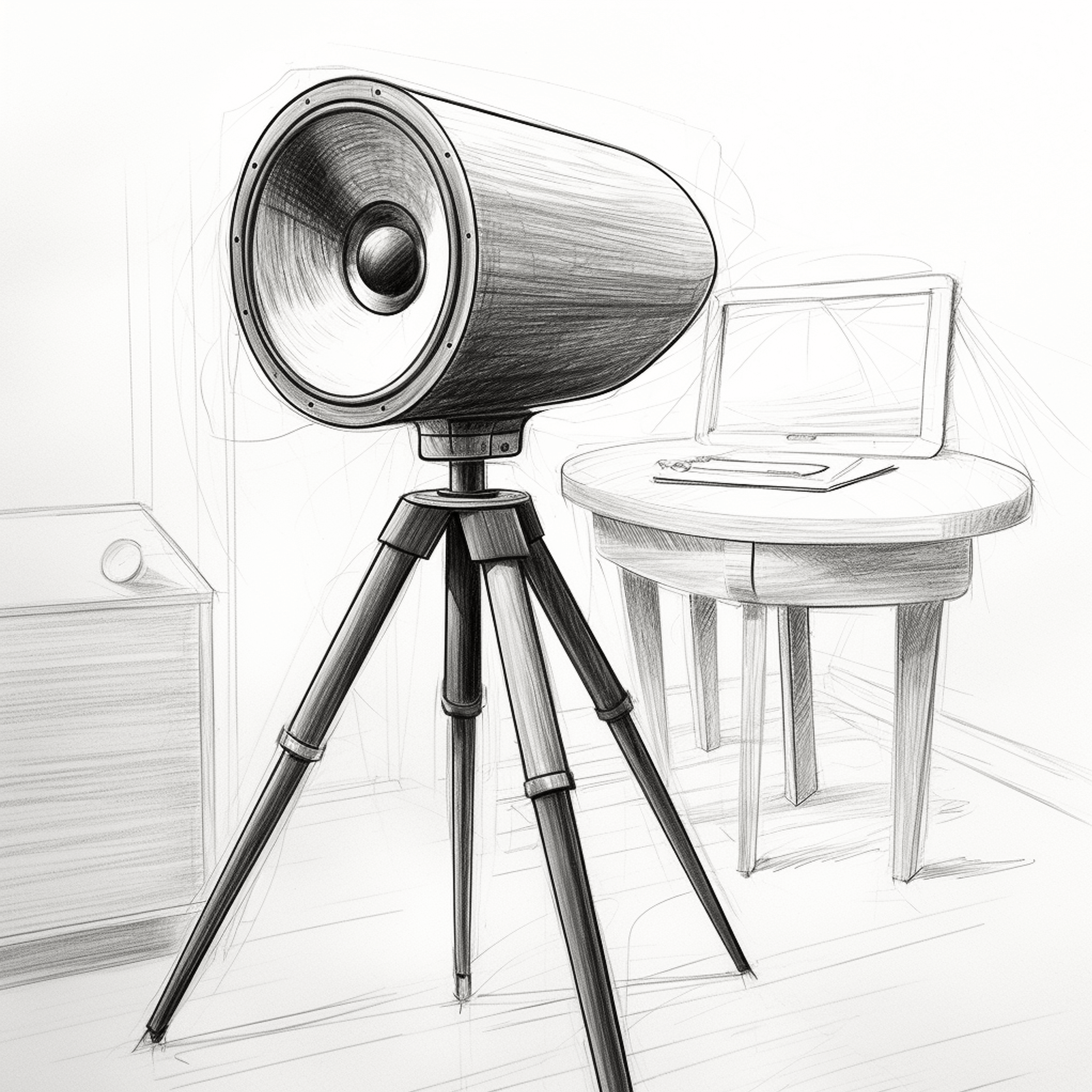

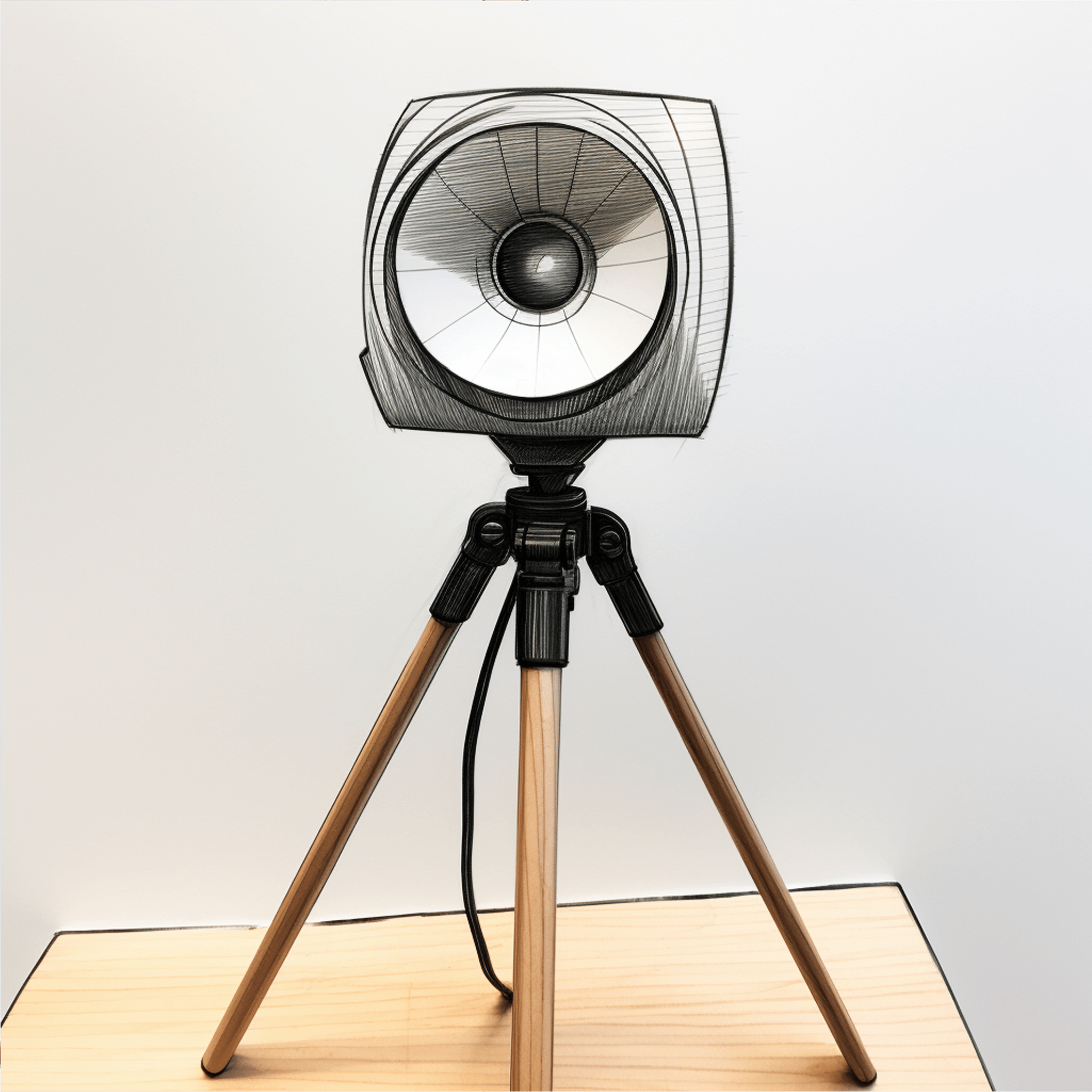

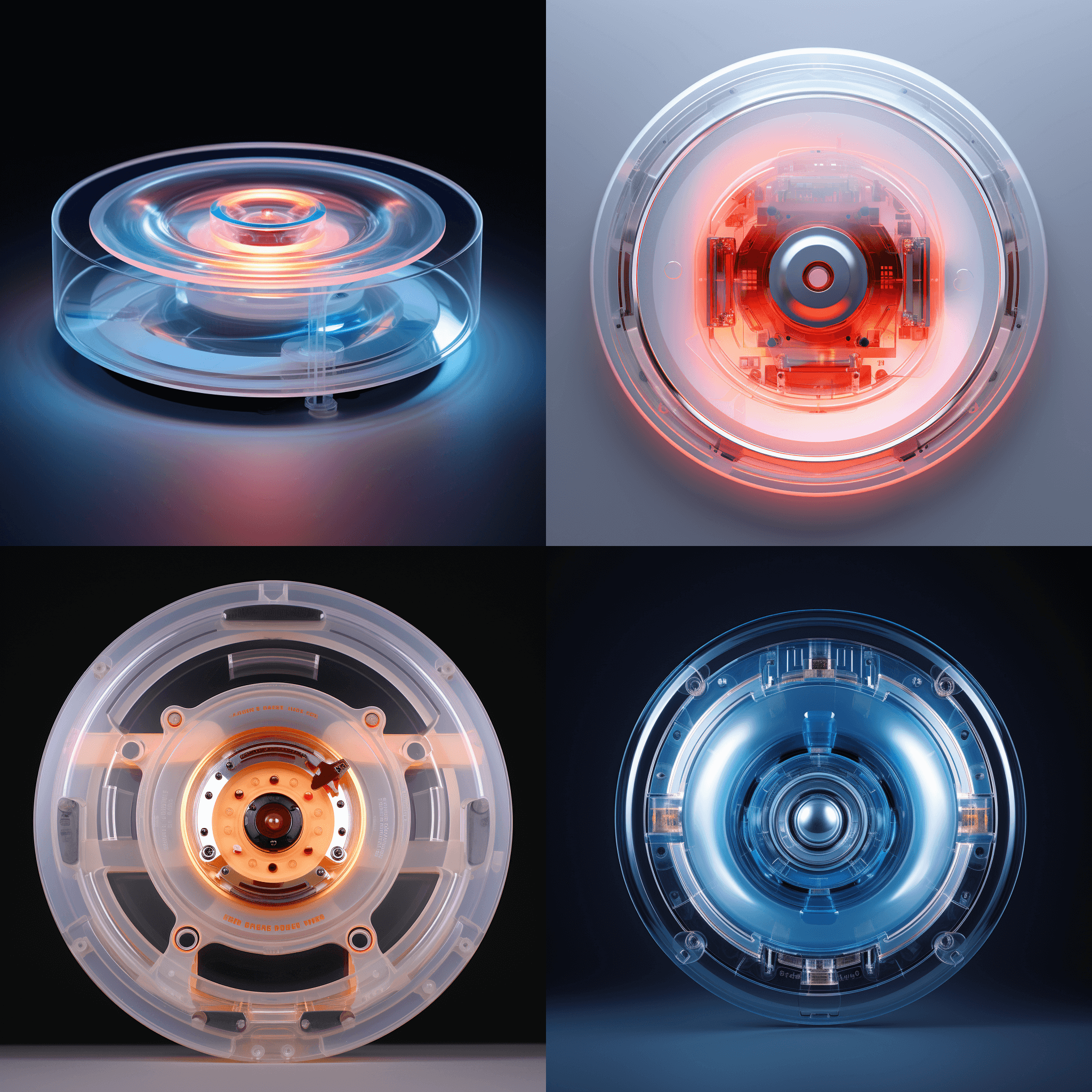

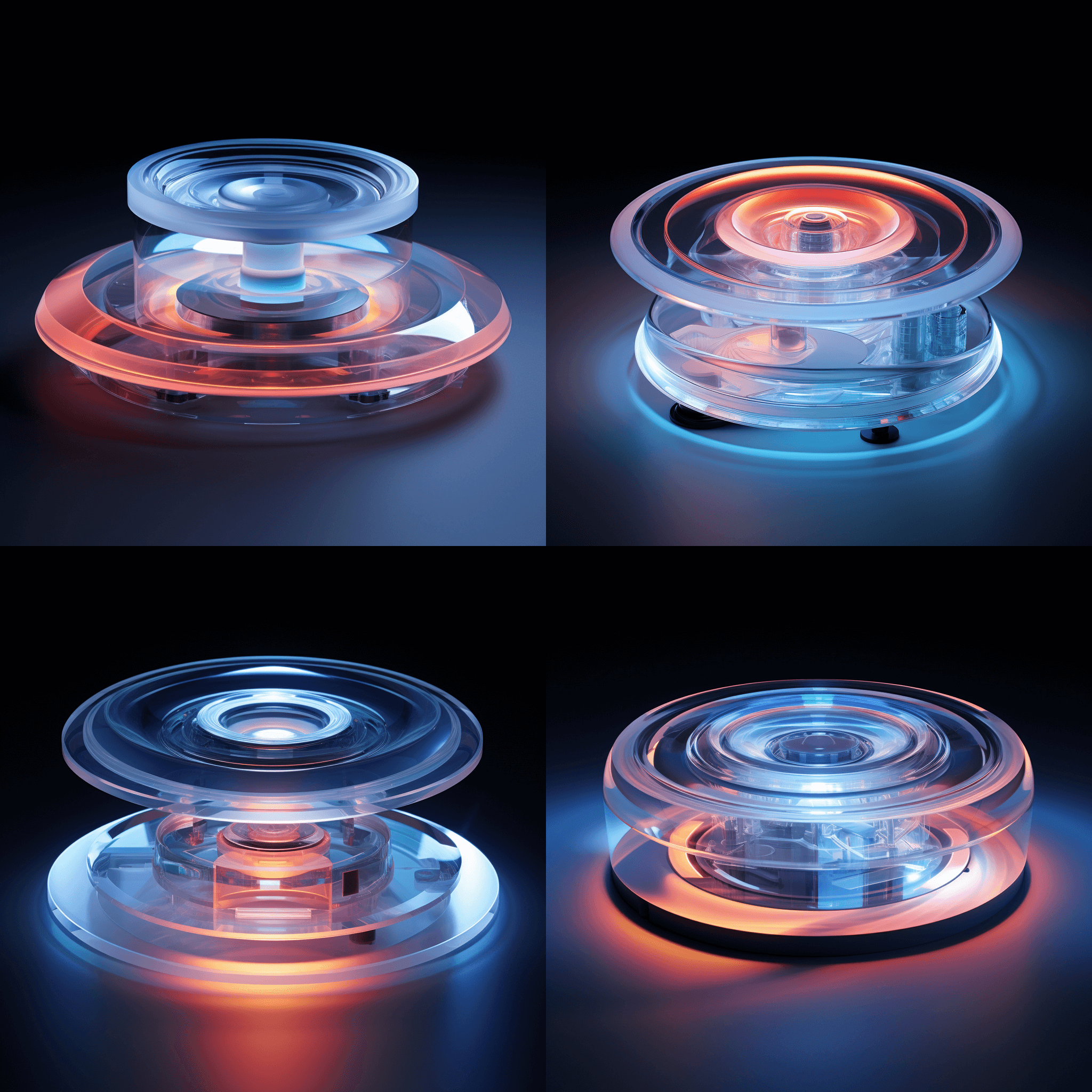

Through app test scans and generative sketch modeling, I explored merging LiDAR scanning, lamps, and speakers into a single disk-shaped device for IRL game control and room scanning. The resulting designs fit easily into any player's space yet quickly transport audiences into the magic of the game.

0C ● MOODJAMS

To prototype grounded immersion and hybrid communities, I used multi-sensory brainstorms to unpack the right textures, sounds, and engagements for the IRL and digital spaces.